Logging the training progress

This example shows how to use the pymia.evaluation package to log the performance of a neural network during training. TensorBoard is commonly used to visualize training progress in deep learning. We will calculate the Dice coefficient of the predicted segmentations against a reference ground truth and log it to TensorBoard.

This example uses PyTorch. At the end of it, you can find the required modifications for TensorFlow.

Tip

This example is available as Jupyter notebook at ./examples/evaluation/logging.ipynb and Python scripts for PyTorch and TensorFlow at ./examples/evaluation/logging_torch.py and ./examples/evaluation/logging_tensorflow.py, respectively.

Note

To be able to run this example:

Get the example data by executing ./examples/example-data/pull_example_data.py.

Install torch (pip install torch).

Install tensorboard (pip install tensorboard).

It also helps to be familiar with the examples "Data extraction and assembling" and "Evaluation of results".

Import the required modules.

[1]:

import os

import numpy as np

import pymia.data.assembler as assm

import pymia.data.backends.pytorch as pymia_torch

import pymia.data.definition as defs

import pymia.data.extraction as extr

import pymia.data.transformation as tfm

import pymia.evaluation.metric as metric

import pymia.evaluation.evaluator as eval_

import pymia.evaluation.writer as writer

import torch

import torch.nn as nn

import torch.utils.data as torch_data

import torch.utils.tensorboard as tensorboard

Let us create a list with the metric to log, the Dice coefficient.

[2]:

metrics = [metric.DiceCoefficient()]
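To make concrete what is being logged, the following is a minimal NumPy sketch of a per-label Dice coefficient. It is not pymia's implementation; the function name dice_for_label, the toy arrays, and the choice to return NaN when a label is absent from both masks are assumptions made for illustration.

```python
import numpy as np


def dice_for_label(prediction, reference, label):
    """Dice overlap between the masks of one label (hypothetical helper)."""
    pred_mask = prediction == label
    ref_mask = reference == label
    denominator = pred_mask.sum() + ref_mask.sum()
    if denominator == 0:
        # label missing in both masks; returning NaN is an assumed convention
        return float('nan')
    intersection = np.logical_and(pred_mask, ref_mask).sum()
    return 2.0 * intersection / denominator


# toy 2-D label maps, invented for this sketch
prediction = np.array([[1, 1, 2], [2, 5, 5]])
reference = np.array([[1, 2, 2], [2, 5, 0]])

for label in (1, 2, 5):
    print(label, dice_for_label(prediction, reference, label))
```

A value of 1 means the two masks overlap perfectly and 0 means they do not overlap at all.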

Now, we need to define the labels we want to log during the training. In the provided example data, we have five labels for different brain structures. Here, we are only interested in three of them: white matter, grey matter, and the thalamus.

[3]:

labels = {1: 'WHITEMATTER',
          2: 'GREYMATTER',
          5: 'THALAMUS'
          }

Using the metrics and labels, we can initialize an evaluator.

[4]:

evaluator = eval_.SegmentationEvaluator(metrics, labels)

The evaluator will return results for all subjects in the dataset. However, we would like to log only statistics like the mean and the standard deviation of the metrics among all subjects. Therefore, we initialize a statistics aggregator.

[5]:

functions = {'MEAN': np.mean, 'STD': np.std}

statistics_aggregator = writer.StatisticsAggregator(functions=functions)
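The idea behind the aggregation can be sketched in plain NumPy: group the per-subject values by label and metric, then apply each function to the group. The tuple layout, the aggregate helper, and the numbers below are hypothetical and do not reflect pymia's internal data structures.

```python
import numpy as np

# hypothetical per-subject results: (subject, label, metric, value)
results = [
    ('Subject_1', 'WHITEMATTER', 'DICE', 0.80),
    ('Subject_2', 'WHITEMATTER', 'DICE', 0.90),
    ('Subject_1', 'THALAMUS', 'DICE', 0.60),
    ('Subject_2', 'THALAMUS', 'DICE', 0.70),
]
functions = {'MEAN': np.mean, 'STD': np.std}


def aggregate(results, functions):
    """Apply each function to the values of every (label, metric) group."""
    grouped = {}
    for _, label, metric_name, value in results:
        grouped.setdefault((label, metric_name), []).append(value)
    return {
        (label, metric_name, fn_name): float(fn(values))
        for (label, metric_name), values in grouped.items()
        for fn_name, fn in functions.items()
    }


summary = aggregate(results, functions)
for key, value in summary.items():
    print(key, round(value, 3))
```

Note that np.std computes the population standard deviation by default (ddof=0), which matters when the number of subjects is small.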

PyTorch provides a module to log to the TensorBoard, which we will use.

[6]:

log_dir = '../example-data/log'

tb = tensorboard.SummaryWriter(os.path.join(log_dir, 'logging-example'))

We now initialize the data handling. Please refer to the Data extraction and assembling example mentioned above to understand what is going on.

[7]:

hdf_file = '../example-data/example-dataset.h5'

transform = tfm.Permute(permutation=(2, 0, 1), entries=(defs.KEY_IMAGES,))

dataset = extr.PymiaDatasource(hdf_file, extr.SliceIndexing(), extr.DataExtractor(categories=(defs.KEY_IMAGES,)), transform)

pytorch_dataset = pymia_torch.PytorchDatasetAdapter(dataset)

loader = torch_data.dataloader.DataLoader(pytorch_dataset, batch_size=100, shuffle=False)

assembler = assm.SubjectAssembler(dataset)

direct_extractor = extr.ComposeExtractor([
    extr.SubjectExtractor(),  # extraction of the subject name for evaluation
    extr.ImagePropertiesExtractor(),  # extraction of image properties (origin, spacing, etc.) for evaluation in physical space
    extr.DataExtractor(categories=(defs.KEY_LABELS,))  # extraction of "labels" entries for evaluation
])

Let’s now define a dummy network, which will actually just return a random prediction.

[8]:

class DummyNetwork(nn.Module):

    def forward(self, x):
        return torch.randint(0, 5, (x.size(0), 1, *x.size()[2:]))

dummy_network = DummyNetwork()

torch.manual_seed(0)  # set seed for reproducibility

[8]:

<torch._C.Generator at 0x7f09f951adb0>

We can now start the training loop. We will loop over the samples in our dataset, feed them to the “neural network”, and assemble them back into entire volumetric predictions. As soon as a prediction is fully assembled, it will be evaluated against its reference. We do this evaluation in the physical space, as the spacing might be important for metrics like the Hausdorff distance (distances in mm rather than voxels). At the end of each epoch, we calculate the mean and standard deviation of the metrics among all subjects in the dataset and log them to the TensorBoard. Note that this example is for illustration only: in practice, you would usually log the performance on the validation set.

[9]:

nb_batches = len(loader)

epochs = 10

for epoch in range(epochs):
    print(f'Epoch {epoch + 1}/{epochs}')

    for i, batch in enumerate(loader):
        # get the data from batch and predict
        x, sample_indices = batch[defs.KEY_IMAGES], batch[defs.KEY_SAMPLE_INDEX]
        prediction = dummy_network(x)

        # translate the prediction to numpy and back to (B)HWC (channel last)
        numpy_prediction = prediction.numpy().transpose((0, 2, 3, 1))

        # add the batch prediction to the assembler
        is_last = i == nb_batches - 1
        assembler.add_batch(numpy_prediction, sample_indices.numpy(), is_last)

        # process the subjects/images that are fully assembled
        for subject_index in assembler.subjects_ready:
            subject_prediction = assembler.get_assembled_subject(subject_index)

            # extract the target and image properties via direct extract
            direct_sample = dataset.direct_extract(direct_extractor, subject_index)
            reference, image_properties = direct_sample[defs.KEY_LABELS], direct_sample[defs.KEY_PROPERTIES]

            # evaluate the prediction against the reference
            evaluator.evaluate(subject_prediction[..., 0], reference[..., 0], direct_sample[defs.KEY_SUBJECT])

    # calculate mean and standard deviation of each metric
    results = statistics_aggregator.calculate(evaluator.results)

    # log to TensorBoard into category train
    for result in results:
        tb.add_scalar(f'train/{result.metric}-{result.id_}', result.value, epoch)

    # clear results such that the evaluator is ready for the next evaluation
    evaluator.clear()

Epoch 1/10

Epoch 2/10

Epoch 3/10

Epoch 4/10

Epoch 5/10

Epoch 6/10

Epoch 7/10

Epoch 8/10

Epoch 9/10

Epoch 10/10
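The assembling step in the loop above can be pictured with a small toy class: slice predictions arrive batch by batch, and a subject becomes "ready" once all of its slices have been stored. This is a simplified sketch, not pymia's SubjectAssembler; ToySliceAssembler, its arguments, and the explicit subject and slice position lists are hypothetical (pymia works with sample indices from the data source instead).

```python
import numpy as np


class ToySliceAssembler:
    """Hypothetical, simplified stand-in for a subject assembler."""

    def __init__(self, slices_per_subject, slice_shape):
        self.slices_per_subject = slices_per_subject
        self.slice_shape = slice_shape
        self.volumes = {}  # subject -> partially filled volume
        self.filled = {}   # subject -> set of slice positions already stored

    def add_batch(self, predictions, subjects, slice_positions):
        for pred, subject, z in zip(predictions, subjects, slice_positions):
            if subject not in self.volumes:
                shape = (self.slices_per_subject, *self.slice_shape)
                self.volumes[subject] = np.zeros(shape, dtype=pred.dtype)
                self.filled[subject] = set()
            self.volumes[subject][z] = pred
            self.filled[subject].add(z)

    @property
    def subjects_ready(self):
        # a subject is ready once every slice position has been filled
        return [s for s, zs in self.filled.items()
                if len(zs) == self.slices_per_subject]

    def get_assembled_subject(self, subject):
        del self.filled[subject]
        return self.volumes.pop(subject)


rng = np.random.default_rng(0)
slices = rng.integers(0, 5, size=(6, 2, 2))  # 2 subjects with 3 slices each
subjects = [0, 0, 0, 1, 1, 1]
positions = [0, 1, 2, 0, 1, 2]

toy_assembler = ToySliceAssembler(slices_per_subject=3, slice_shape=(2, 2))
ready_after_batch = []
volumes = {}
for start in (0, 4):  # batches of up to 4 slices may span two subjects
    stop = start + 4
    toy_assembler.add_batch(slices[start:stop], subjects[start:stop], positions[start:stop])
    ready = toy_assembler.subjects_ready
    ready_after_batch.append(list(ready))
    for subject in ready:
        volumes[subject] = toy_assembler.get_assembled_subject(subject)

print(ready_after_batch)
```

Because a batch can contain slices of more than one subject, evaluation has to wait until a subject is complete rather than run once per batch.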

You can now start TensorBoard and point it to the log directory:

tensorboard --logdir=<path_to_pymia>/examples/example-data/log

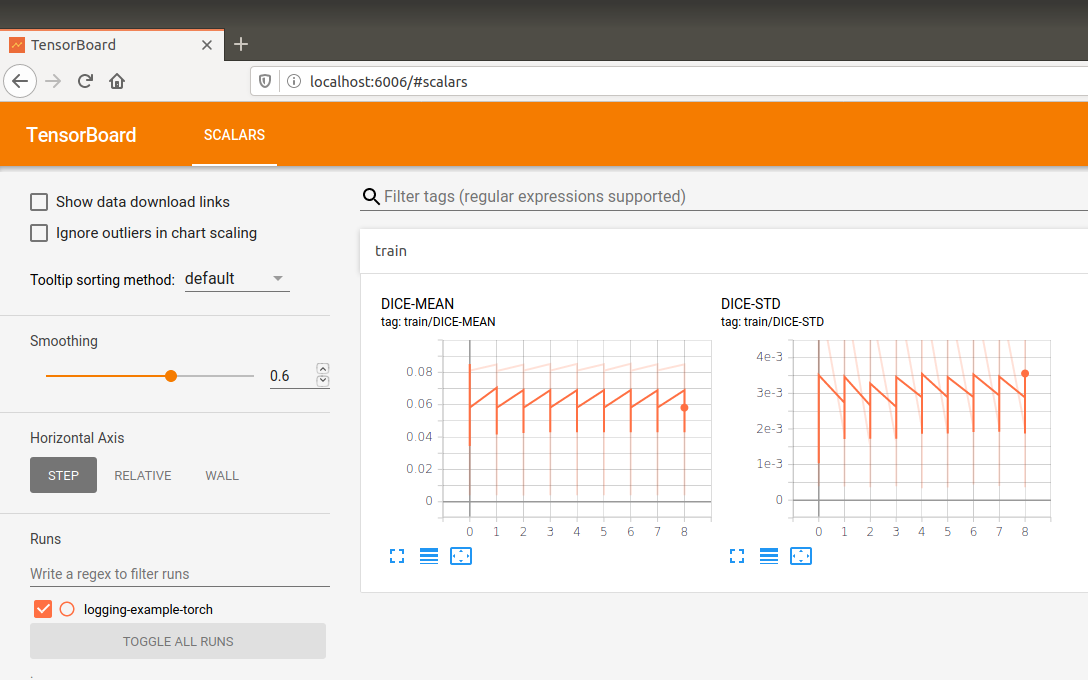

Open a browser and type localhost:6006 to see the logged training progress. It should look similar to the figure below (the data does not make a lot of sense as we create random predictions).

TensorFlow adaptions

For the presented logging to work with the TensorFlow framework, only minor modifications are required: (1) modifications of the imports, (2) framework-specific TensorBoard logging, and (3) framework-specific data handling.

# 1)

import tensorflow as tf

import pymia.data.backends.tensorflow as pymia_tf

# 2)

tb = tf.summary.create_file_writer(os.path.join(log_dir, 'logging-example'))

for result in results:
    with tb.as_default():
        tf.summary.scalar(f'train/{result.metric}-{result.id_}', result.value, epoch)

# 3)

gen_fn = pymia_tf.get_tf_generator(dataset)

tf_dataset = tf.data.Dataset.from_generator(generator=gen_fn,
                                            output_types={defs.KEY_IMAGES: tf.float32,
                                                          defs.KEY_SAMPLE_INDEX: tf.int64})

loader = tf_dataset.batch(100)

class DummyNetwork(tf.keras.Model):

    def call(self, inputs):
        return tf.random.uniform((*inputs.shape[:-1], 1), 0, 6, dtype=tf.int32)

dummy_network = DummyNetwork()

tf.random.set_seed(0) # set seed for reproducibility

# no permutation transform needed. Thus the lines

transform = tfm.Permute(permutation=(2, 0, 1), entries=(defs.KEY_IMAGES,))

numpy_prediction = prediction.numpy().transpose((0, 2, 3, 1))

# become

transform = None

numpy_prediction = prediction.numpy()
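The reason for the permutation in the PyTorch variant can be followed by tracking array shapes. The sketch below uses NumPy only and invented sizes (height 8, width 6, 2 image channels, batch of 4); it assumes the data is stored channel-last, as the comment about going "back to (B)HWC" in the training loop suggests.

```python
import numpy as np

height, width, channels = 8, 6, 2  # hypothetical slice size and channel count

# one sample as stored: channel last (HWC)
sample_hwc = np.zeros((height, width, channels), dtype=np.float32)

# the (2, 0, 1) permutation moves the channels to the front (CHW)
sample_chw = sample_hwc.transpose((2, 0, 1))

# batching adds a leading dimension (BCHW)
batch_chw = np.stack([sample_chw] * 4)

# a single-channel prediction with the same batch and spatial dimensions (B1HW)
prediction = np.zeros((batch_chw.shape[0], 1, *batch_chw.shape[2:]), dtype=np.int64)

# transposing with (0, 2, 3, 1) restores channel last (BHW1)
prediction_channel_last = prediction.transpose((0, 2, 3, 1))

print(sample_chw.shape, batch_chw.shape, prediction_channel_last.shape)
```

With a channel-last framework, both transpositions drop out, which is why the transform becomes None and the prediction is used as returned.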